What magical trick makes us intelligent? The trick is that there is no trick. The power of intelligence stems from our vast diversity, not from any single, perfect principle.

Marvin Minsky

The Frontiers of Increasing AI Performance

There has been a lot of work done on pushing the performance of machine learning with the structure of transformers + scaling in the past few years. Alongside this, AI has continued to progress performance with things like more advanced prompt engineering, chain of thought, expanded context windows, data-calling pipelines like RAG, mixture of experts models, and most recently merged models (Frankenmerges being my favorite naming convention).

Alongside the consensus of Transformer-driven approaches, small parts of the AI world have turned to look at novel architectures like those used by HYENA, RWKV, Mamba, and more that aim to solve many of the difficulties with pure play Transfomer architecture.

All of these are an attempt to have better, faster, and larger scopes of knowledge and/or “more correct” knowledge, alongside more efficiency. Despite the improvements, it is debatable if we have seen creativity really emerge and there have been few examples of highly novel insights generated using LLMs.

Put simply, AI is great at getting to binarily correct answers quickly, but it’s not clear it has a unique performance edge to humans in creatively minded and/or non-deterministic problems.

Researchers in MAD have even noticed that regardless of the pushing from a user, “Once the LLM has established confidence in its answers, it is unable to generate novel thoughts later through self reflection even if the initial stance is incorrect.” They call this Degeneration of Thought.

Like many people over the long history of AI, I am left wondering if the solution for unlocking creativity and increasing performance can come from a more “biological” approach. Will true AGI come not from distilling all of human knowledge down to a series of weights, but from observing the debate and conversation amongst brilliant entities?

Enter Collective Intelligence (CI) and Multi-Agent Debate (MAD).

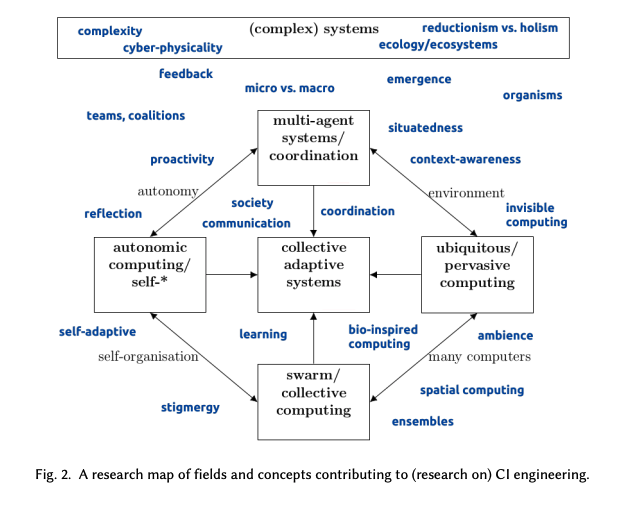

Collective Intelligence: The Core Premise and its Evolution

Often, decisions made by an individual may lack the precision seen in decisions formed by the majority. Collective intelligence is a kind of shared or group intelligence, a process where the opinions of many are consolidated into decisions. It arises from the collaboration and competition amongst various entities. This intelligence manifests in bacteria, animals, humans, and computer networks, appearing in various consensus-based decision-making patterns…

…By harnessing communication and evolution within an agent society,

The Rise and Potential of Large Language Model

it becomes possible to simulate the evolution observed in biological societies, conduct sociological experiments, and gain insights that can potentially advance human society.

Based Agents: A Survey

There are many ways in which the AI community describes collective intelligence, all filled with various naming conventions and nuances. We view Collective Intelligence as the broader study of a variety of opinions being consolidated into a decision, while Multi-Agent Debate is a mechanism that serves LLMs well to engage in this. 1MAD dates back to the 1980s, with this book by Michael Woolridge from 2002/9 being a great moment in time look at the promises of the concept. It definitely is, 100% not not available on LibGen if you’re interested in reading it.

We believe these all surround the premise of using a variety of entities which are embodied with specific principles, data, viewpoints, etc. in order to come to a nuanced answer or collection of insights. These agents are typically managed by an AI Orchestrator of sorts, which is tasked with a higher level set of goals/criteria to accomplish and acts to advance the discussion.

I originally started experimenting with MAD through more sophisticated prompts enabled by frontier LLMs expanding larger context windows. The first example was using a prompt structure called HIVE (Highly Intelligent Virtual Entities), which you can see the results of in the example below if you expand the text.

Expand here for HIVE Prompt Example

*HIVE PROMPT*

Context: I’d like to start a company that uses AI to analyze audio for early detection of diseases. I want it to be big enough to generate at least $250M of revenue per year after 7 years. I’d like to know what products i should start with, what research papers supports being able to create those products, and who my first 5 customers should be. I’d also like to know how much to charge them and why.

Result:Initiating the HIVE MIND session with 5 Virtual Entities to solve the problem:

Evelyn: AI Technology Specialist (Personality traits: Innovative, Analytical, Direct)

Marcus: Healthcare Industry Expert (Personality traits: Experienced, Frank, Strategic)

Leon: Financial Analyst (Personality traits: Meticulous, Realist, Blunt)

Sophia: Legal and Compliance Advisor (Personality traits: Thorough, Ethical, Pragmatic)

Nina: Consumer Behavior Analyst (Personality traits: Insightful, Creative, Honest)

Evelyn: We’re looking at integrating AI in audio analysis for early disease detection. This is cutting-edge, but let’s not forget the importance of a robust AI model. I suggest we start by identifying diseases that have distinct audible markers. Research papers have shown potential in detecting respiratory diseases from cough sounds. Let’s focus on this.

Marcus: Agreed, Evelyn. Respiratory diseases are a good start. However, the healthcare sector is heavily regulated. We need to ensure that our product aligns with medical standards and has the necessary certifications. Partnerships with medical institutions for clinical trials could be pivotal.

Leon: From a financial perspective, we must consider the development costs against our seven-year, $250M revenue goal. Investing in solid R&D is crucial, but we need a clear path to profitability. We should consider a subscription-based model for continuous revenue flow.

Sophia: While you’re all focusing on the product and finances, don’t underestimate regulatory hurdles. We need to navigate FDA approvals and data privacy laws, especially HIPAA in the US. It’s not just about creating a product but ensuring it’s legally compliant.

Nina: Understanding our customers is key. We should target healthcare providers who are early adopters of technology. They’ll be more open to integrating our solution into their practice. As for pricing, it should reflect the value we provide in improving diagnostic accuracy and saving time, but we must also consider what the market can bear.

(PAUSE)

How would you like the Virtual Entities to proceed?

Continue

Summary

Devils Advocacy

Ask the User Questions

Or provide your own input to continue the conversation

*END EXAMPLE* (a user would continue to push the conversation further here)

The core premise of HIVE is that you have a hive mind of 5 entities that can discuss and debate a given task with prompts built in to continue to further the conversation via user input. Each entity is given a first name, a background, and a number of personality traits that either the user programs or the LLM will automatically create based off of the given task. It works decently well at encouraging and simulating what I would deem predictable but at times intriguing debate.

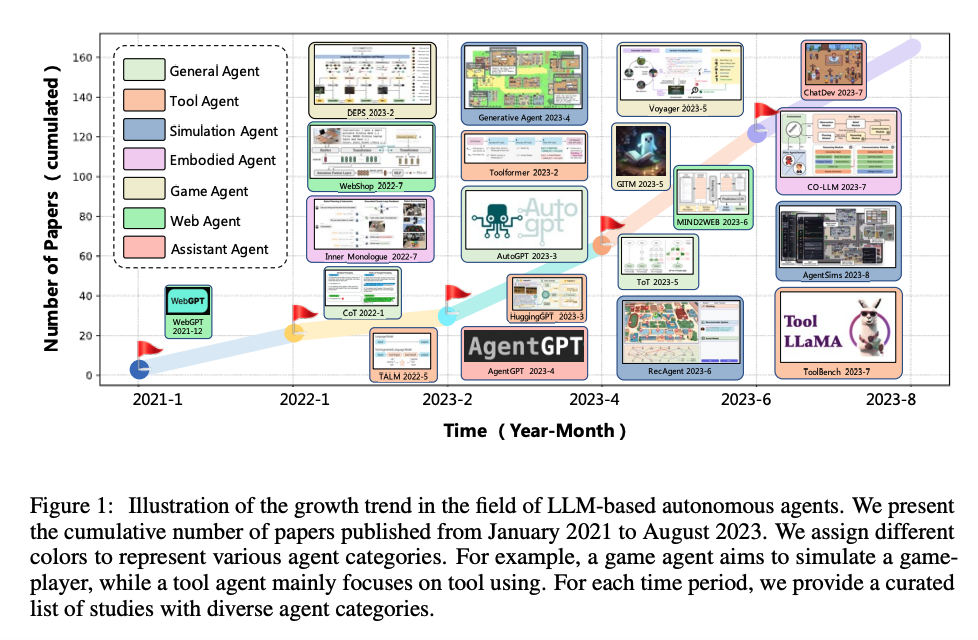

As we moved away from simple prompting, we began to see an explosion of more advanced LLM-based autonomous agents across a variety of agent types armed with various types of infrastructure.

An Explosion of Research and Use-Cases in 2023

As many started to notice the power of MAD, new technical implementations of the concept began to accelerate. Perhaps the most notable has been AutoGen from Microsoft, as well as CrewAI, both of which aim to provide core infrastructure to enable multi-agent debate. We’ve also seen adjacent projects such as MemGPT or SocialAGI, which create self-updating models for the user with “infinite” context, as well as RAISE which uses a modular scratchpad approach for adjusting agents.

A large portion of this got on my radar due to Generative Agents: Interactive Simulacra of Human Behavior which created a sandbox environment where agents autonomously took actions, remembered and reflected on them, and then stored the insights as memories.

People were quick to fork the repo, leading to various experiments and boundaries being pushed to understand just how much we could learn from these agents collaborating and living in this world. That said, despite it being incredibly fun, the environment was a bit too open to drive meaningful results for now.2I recommend forking this and running a local LLM to minimize costs. It’s a fun experiment.

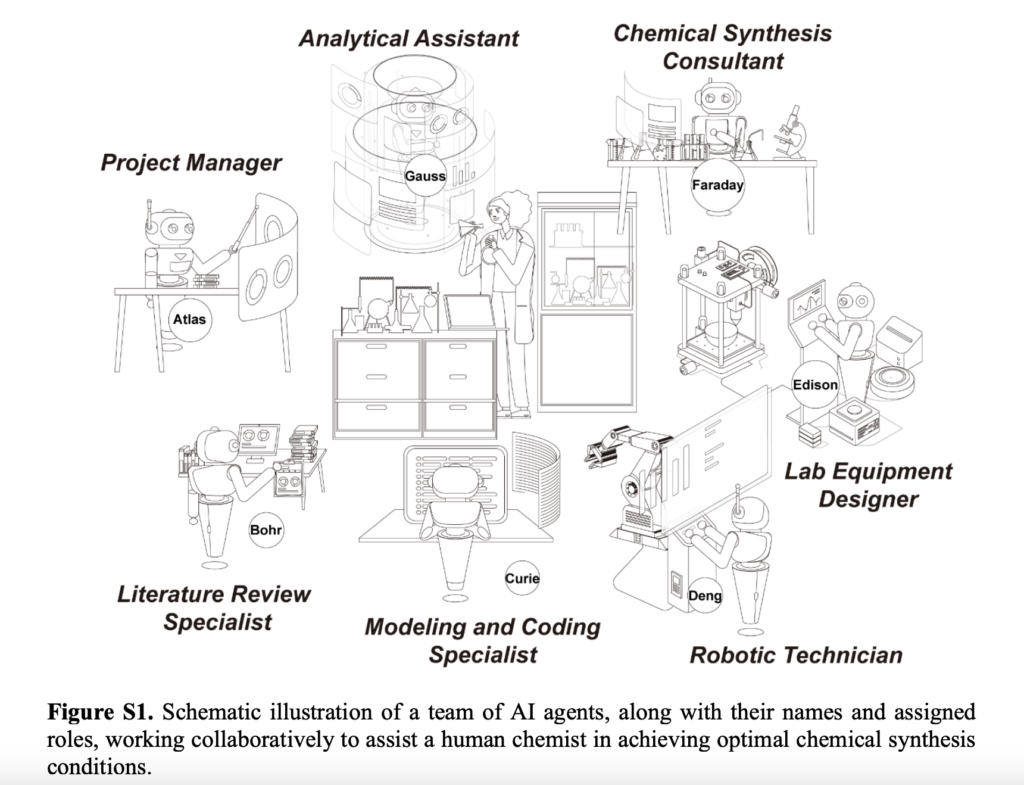

A variety of papers built upon this idea (and many others of biological intelligence) to build out more focused use-cases to Collective Intelligence and MAD systems.

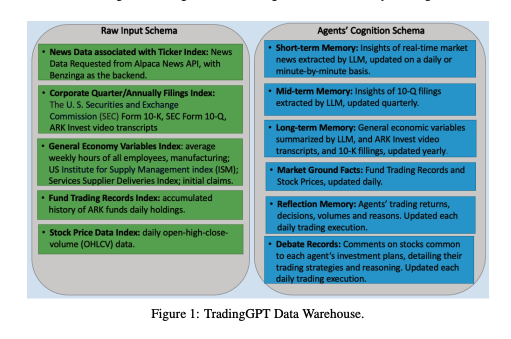

TradingGPT effectively simulated a hedge fund by orchestrating distinct characters using layered memory in agents and debate to build trading strategies and make investment decisions.

Their abstract describes this framework:

The efficacy of GPTs lies in their ability to decode human instructions, achieved through comprehensively processing historical inputs as an entirety within their memory system. Yet, the memory processing of GPTs does not precisely emulate the hierarchical nature of human memory, which is categorized into long, medium, and short-term layers. This can result in LLMs struggling to prioritize immediate and critical tasks efficiently. To bridge this gap, we introduce an innovative LLM multi-agent framework endowed with layered memories.

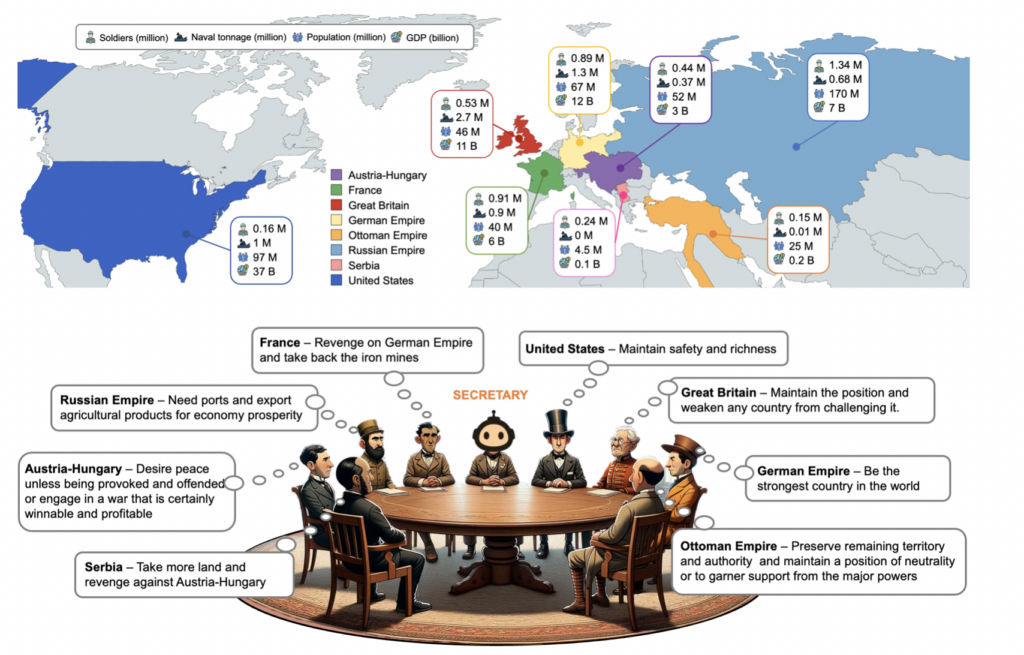

Through 2023, more research emerged ranging from simplistic/dressed up CoT prompting mechanisms to advanced retrieval dynamics to novel memory architectures, and much more. These use-cases include Design, Education, Medicine, Chemistry labwork, Social Science Research, Scientific Paper Review, and even World Wars, among many others.

For further exploration I highly recommend survey papers including this one from September 2023, this other one from September 2023, and this one from January 2024.3I’m sure there are others but this is like 300 papers to read so…enjoy

The main underpinning across all of these is that they were able to increase performance either on a relative basis to the model4While not purely multi-agent debate, AutoACT for example used a division of labor strategy to get LLaMa-2-13b to perform close to ChatGPT 3.5-Turbo or an absolute basis. In addition, they allow humans and models to reflect upon outputs from LLMs in a more thoughtful way due to the debate modality instead of the prompt -> answer modality.

A note on Compute Constraints

A perpetual question that comes up in discussing CI is how much compute will be required to push the frontier. My view is we can look towards frontier models broadly, which are grappling with similar trade off questions on a weekly basis.5Yes OAI, Anthropic, and META are going to throw waves of H100s and data at frontier models, but at the same time Microsoft is pushing on models like Phi and others. We are lucky that we get massive R&D spend on both sides with some nice OSS contributions on smaller models.

MoE models and merged model architectures represent significant strides in this direction.6MoE models, by activating only the relevant ‘experts’ for specific tasks, sidestep the computational exorbitance of monolithic models. Similarly, merged models optimize performance by intelligently amalgamating neural pathways. Both of these architectural innovations perhaps point to the idea that achieving breakthrough performance is as much about strategic structural innovation as it is about raw computational power, ensuring every computational unit is maximized. And if we believe that, it’s not clear to me that we won’t have similar structural experiments with single MoE models optimized for debate all the way to using multiple models at once (and properly syncing the learning of them) to do MAD.

The Progression of Collective Intelligence

Cambridge Student: “To get to AGI, can we just keep min maxing language models, or is there another breakthrough that we haven’t really found yet to get to AGI?”

Sam Altman: “We need another breakthrough. We can still push on large language models quite a lot, and we will do that. We can take the hill that we’re on and keep climbing it, and the peak of that is still pretty far away. But, within reason, I don’t think that doing that will (get us to) AGI. If (for example) super intelligence can’t discover novel physics I don’t think it’s a superintelligence. And teaching it to clone the behavior of humans and human text – I don’t think that’s going to get there.And so there’s this question which has been debated in the field for a long time: what do we have to do in addition to a language model to make a system that can go discover new physics?”

Sam Altman & OpenAI | 2023 Hawking Fellow | Cambridge Union

As we continue to progress in AI, there are both societal and technologically important impacts Collective Intelligence and MAD may have.

The Selection of agents for debate

We as individuals might train agents to represent our own interests with both private and external data to make or simulate decisions on our behalf. This may be viewed as a clone of ourselves, or it may be viewed as our copilot, chief of staff, or assistant, depending on how we orchestrate and feed it data and context.

We also will use external-only facing agents like those made by Delphi. This will lead to more sophisticated merged agents of various entities that can then be related within a given persona. This is in some ways a subset of “traditional” collective intelligence where the cumulative intelligence of given domain experts create a master entity to engage in debate with other master entities, like having an economist (which is comprised of knowledge from many economist agents) debate a trader in public market analysis.7This psychologically is also interesting because it gets at the idea that “experts” perhaps are not ideal for debating complex and uncertain topics. Maybe our blind spots are what enable us to be optimistic or think creatively?

The role of humans

Humans may follow a progression of usefulness in tasks in a Collective Intelligence driven world until ultimately serving as data input instead of arbiters of truth.

First, humans will play a role as the ultimate orchestrator of a given task. We experienced this already with the usage of HIVE, as it implements a human feedback mechanism to steer the debate as well as with custom instructions in ChatGPT where the model suggests further exploratory questions based on context and then the user chooses which direction to go.8I implemented this in my Research Paper Reader GPT

While today these suggestions are ideal ~30% of the time, we expect LLMs will become more proficient at iterating suggestions. This skillset will progress into AI orchestrators that will drive conversations at a higher % than humans in some form, before eventually advancing the vast majority of multi-agent debate towards a form of self-play.

Self-Play, Self-Reward Language Models, & Synthetic Data

Alex Irpan recently published one of the best posts on the state of AI I’ve read in awhile.

In it, he discussed the OpenAI team’s continual pursuit of self-play and the immense value if done correctly to progressing intelligence:

A long time ago, when OpenAI still did RL in games / simulation, they were very into self-play. You run agents against copies of themselves, score their interactions, and update the models towards interactions with higher reward. Given enough time, they learn complex strategies through competition. At the time, I remember Ilya said they cared because self-play was a method to turn compute into data. You run your model, get data from your model’s interactions with the environment, funnel it back in, and get an exponential improvement in your Elo curves. What was quickly clear was that this was true, but only in the narrow regime where self-play was possible. In practice that usually meant game-like environments with at most a few hundred different entities, plus a ground truth reward function that was not too easy or hard, and an easy ability to reset and run faster than real time. Without all those qualities, self-play sputtered and died with nothing to show for it besides warmer GPUs.

While self-play has canonically been built around using reinforcement learning (RL) in environments with clearer reward functions and outcomes, it’s likely similar approaches could continue to push forward MAD.

On queue, Meta recently published Self-Rewarding Language Models (SR-LMs) which enables a model to iteratively evaluate and tweak its responses based on certain criteria. Unlike traditional self-play in games, the goal here is not just to win a game but to improve the quality and relevance of text generation and understanding with a synthetic data feedback loop.9The paper didn’t explore more than 3 iterations, so there could even be scaling laws that show more iterations improve performance even more.

It doesn’t take much creativity to see how this could progress in both the form of individual agent generation as well as debate quality with SR-LM like models as over time, the agents involved in the debate could become more adept at constructing arguments and counterarguments, learning from previous interactions just like they learn from their self-generated text. 10There is some risk here of ‘reward hacking’ or echo chambers, where the system might reinforce its biases or exploit loopholes in the reward mechanism in the criteria

The mechanism of effectively dueling agents shouldn’t be new to those of us who were fascinated by Generative Adversarial Networks over the past decade. Irpan draws a similar conclusion with respect to these types of behaviors entering into language models.

Language models in 2024 remind me of image classification in 2016, where people turned to GANs to augment their datasets. One of my first papers, GraspGAN, was on the subject, and we showed it worked in the low-data regime of robotics. “Every image on the Internet” is now arguably a low-data regime, which is a bit crazy to think about.

These concepts align well with what I have been calling a second revolution in synthetic data, as we’ve seen research labs like Nous Research use synthetic data to train and fine-tune a variety of models, as well as larger labs increasingly showing very strong performance with their own synthetic data.

Moving Forward

From Debate to Simulation

It is important to remember that MAD in its current form is very skeuomorphic in structure. Just as we have seen organizational structures evolve over time with new roles tasked with new objectives, enabled by new technologies, it’s likely that agent debate will progress in ways that aren’t pattern-matched only to how our current world works.

While this means we could eventually have previously un-thought of roles and debate structures, in the interim, we could bring in novel types of environments that impact how agents move, behave and think. We also could have different types of emotions impact behaviors. This paper, talks about emotional intelligence being integrated into LLMs showing a 10% performance uplift. One could imagine companies like Hume.ai expanding the boundaries of this.

We can naturally push even further towards longer-term simulation of events as we simplistically saw in WarAgent. The implications of this are obvious but it’s worth asking the question If we can simulate how large groups of intelligent people make decisions through sophisticated MAD, and understand confidence variables of these end states through Collective Intelligence principles, have we not built a future prediction machine?11Of course there is complexity with limiting hallucinations. This paper talks about some of the downfalls of “out of context” data asks and the hallucinations. That said, perhaps the hallucination if described or reasoned would be helpful “i don’t know but based on this person…” anyways, this may be a stretch but let me dream.

A Note on Scaling Laws & Band-Aids

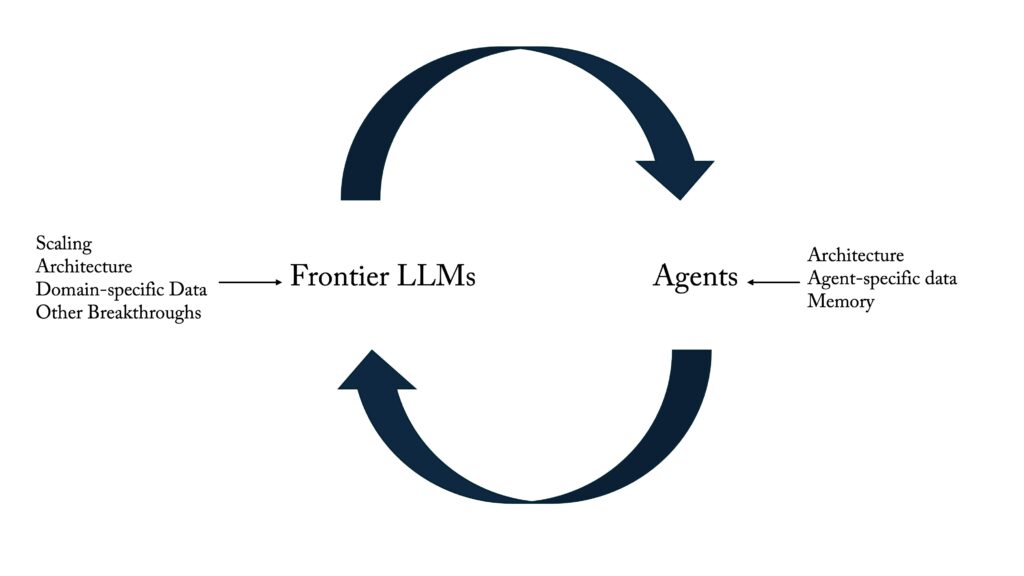

There is an open question about how to think about Collective Intelligence as it relates to frontier LLM development as we canonically understand it today. Earlier, we talked about a lot of the core approaches to scaling performance as well as adjacent shots on goal to solve LLMs shortfalls.

My gut tells me that there is likely some sort of symbiosis between these two concepts. Put more specifically, as scaling continues to progress creating better LLMs, which will enable better agents, there will also be a feedback loop of adjacent research that accelerates agent ROI across a variety of use cases. Whether or not this is owned by large AI labs like OpenAI, Anthropic or Deepmind, or we see Collective Intelligence focused AI labs such as Sakana own this, is to be seen.

There are perhaps some parallels to draw from AVs which saw dogmatic lock in on the core technical approaches, only to have to pivot many years later once they reached limits on either performance or economic realities on their approaches.12You can read about this more in On Inflection Points. The difference however could be that OpenAI has shown strong malleability in technical approaches, shifting from DRL to Transformers in their lifetime.

The innovations that could come through to inflect performance on each of these are somewhat similar across scaling, architectural shifts, and data. However, if we progress towards the approach of trying to bring “embodiment” into agents that then navigate complex idea mazes, it’s likely the nuance is in how one achieves this across the entire stack from bringing in out-of-distribution data from the underlying base models, all the way to how we enable function calling for a given agent, among many other things.

There is still much to understand here, however it is a worthy discussion as we have already seen many areas that people thought would be standalone business-worthy get subsumed by better research improving shortfalls of frontier LLMs.13Hello context window expansion.

CI -> MAD -> AGI -> ASI

My original hypothesis was that Collective Intelligence and more specifically Multi-Agent Debate could be what leads us towards a form of AGI across multiple more esoteric, but impressive, tasks. Over time, it became clear that there are many avenues where these could actually propel us to some form of artificial super intelligence (ASI) due to a possible ability to generate novel ideas at a rate that humans cannot, and perhaps navigate the various branches of the future through simulation done by superhuman agents.

Doomers like to say that AI will replace humans. Optimists say AI will enable humans to do more than ever before. But perhaps what humanity does and what AI do is they bring together a new type of way to explore our ever-growing collective intelligence.

Machine learning reimplements human intelligence.

Language models emulate humanity’s collective intelligence.

On the future of language models

Thanks to Kevin Kwok for thoughts on this piece.

Recent Comments